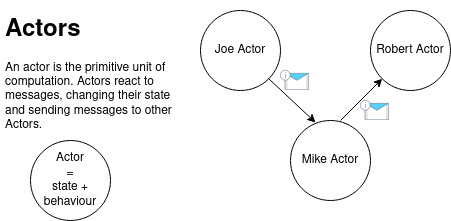

The Actor Model, introduced by Carl Hewitt in 1973, is a conceptual framework for dealing with concurrent computation. Unlike traditional models that rely on shared state and locks, the Actor Model provides a highly modular and scalable approach to designing systems, making it particularly well-suited for distributed and concurrent applications.

At its core, the Actor Model revolves around the concept of "actors"—independent entities that encapsulate state and behavior. Each actor can:

- Receive messages from other actors.

- Process these messages using its internal behavior, updating its private state or changing how it will handle the next message.

- Send messages to other actors.

- Create new actors.

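Because each actor processes only one message at a time and no other actor can touch its state directly, the model eliminates data races on that state: every modification happens sequentially inside the owning actor, and actors communicate exclusively through message passing. The model does not rule out every concurrency hazard, however. Two actors that each block waiting for a reply from the other can still deadlock, and messages from different senders may arrive in any order, so logic that depends on arrival order can still race.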
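To make the one-message-at-a-time rule concrete, here is a minimal sketch using only Python's standard library (`queue`, `threading`). `CounterActor`, `demo`, and the message tuples are illustrative names, not part of any actor framework, and a dedicated OS thread per actor is far heavier than what real actor runtimes use.

```python
import queue
import threading


class CounterActor:
    """Minimal actor: private state, a FIFO mailbox, and a single thread
    that handles one message at a time."""

    def __init__(self):
        self._count = 0                    # private state, only touched by the actor's thread
        self._mailbox = queue.Queue()      # thread-safe FIFO mailbox
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def send(self, message):
        """The only way other threads interact with the actor."""
        self._mailbox.put(message)

    def _run(self):
        while True:
            kind, payload = self._mailbox.get()
            if kind == "increment":
                self._count += payload     # no lock: messages are processed sequentially
            elif kind == "get":
                payload.put(self._count)   # reply on the queue the sender supplied
            elif kind == "stop":
                return


def demo(senders=4, per_sender=1000):
    counter = CounterActor()

    def spam():
        for _ in range(per_sender):
            counter.send(("increment", 1))

    threads = [threading.Thread(target=spam) for _ in range(senders)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    reply = queue.Queue()
    counter.send(("get", reply))           # enqueued after every increment, so it sees them all
    total = reply.get(timeout=5)
    counter.send(("stop", None))
    return total
```

Several threads hammer the counter concurrently, yet no lock is needed: only the actor's own thread ever reads or writes `_count`.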

Key Benefits

Concurrency and Scalability: Because actors share no state and interact only through messages, an actor system can be spread across multiple processors or machines while keeping the same message-passing design. This makes the model a strong fit for cloud-native applications and services that demand high concurrency.

Fault Tolerance: Actors can be designed to monitor and manage other actors, allowing for robust error handling and recovery strategies. This self-healing capability is a cornerstone of resilient system design.

Modularity: Actors encapsulate state and behavior, promoting a modular structure that is easier to reason about, test, and maintain. This separation of concerns aligns well with microservices architecture, where each service can be seen as an actor or a group of actors.

Using the Actor Model to Build Business Applications

In the realm of business applications, the Actor Model can be employed to create robust, scalable, and maintainable systems. Here’s how it can be leveraged:

Order Processing Systems: In e-commerce platforms, each order can be represented as an actor. The order actor can handle various stages of the order lifecycle, including validation, payment processing, inventory adjustment, and shipping. By encapsulating these processes within an actor, the system can handle a large number of orders concurrently without interference.

Customer Service Applications: Each customer session can be modeled as an actor, managing individual interactions and maintaining state throughout the session. This is particularly useful in chatbots and automated customer service systems where maintaining context and state consistency is crucial.

Financial Systems: In banking applications, transactions can be modeled as actors. This allows for isolated, concurrent processing of transactions: each transaction actor owns its own state, so the system can handle many operations simultaneously without one operation corrupting another's data.

Game Applications: In multiplayer online games, actors can represent various entities such as players, non-player characters (NPCs), items, and game rooms. Each actor encapsulates its state and behavior, interacting through messages to create a dynamic and responsive game world.

Architectural Decisions Influenced by Actor Model Constraints

Implementing the Actor Model in business applications necessitates careful architectural planning due to its unique constraints and capabilities:

State Management: Actors maintain their state internally and do not share it. Any information an actor needs from elsewhere must therefore arrive in a message, which may involve breaking down complex processes into smaller, message-driven interactions.

Message Passing: Since actors communicate solely through asynchronous messages, it’s essential to design a robust messaging infrastructure. This includes choosing appropriate messaging protocols, ensuring message delivery guarantees (e.g., at-least-once, at-most-once, or exactly-once delivery), and handling message serialization/deserialization.
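Delivery guarantees shape how receivers must be written. A common technique is to pair at-least-once delivery with an idempotent receiver, so a redelivered message has no extra effect; this is how "effectively-once" processing is usually approximated. The sketch below assumes each message carries a sender-assigned `message_id`; `Deposit`, `AccountActor`, and `deliver_at_least_once` are hypothetical names, and the transport is simulated by calling `receive` directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deposit:
    message_id: str   # sender-assigned unique ID (assumed convention)
    amount: int


class AccountActor:
    """Applies each Deposit once, even if the transport delivers it several times."""

    def __init__(self):
        self.balance = 0
        self._seen = set()   # IDs already applied; a real system would persist and prune this

    def receive(self, msg):
        if msg.message_id in self._seen:
            return "ack-duplicate"   # acknowledge again so the sender stops retrying
        self._seen.add(msg.message_id)
        self.balance += msg.amount
        return "ack"


def deliver_at_least_once(actor, msg, lost_acks=0):
    """Simulated transport: the first `lost_acks` acknowledgements are lost, so the message is resent."""
    attempts = 0
    while True:
        attempts += 1
        ack = actor.receive(msg)
        if attempts > lost_acks:     # this acknowledgement reaches the sender
            return ack, attempts
```

Even when two acknowledgements are lost and the deposit is delivered three times, the balance changes only once.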

Error Handling and Supervision: Actors can supervise other actors, which means designing a supervision strategy is crucial. This involves defining how actors should respond to failures—whether they should restart, escalate the error, or stop entirely. Effective supervision trees help in building resilient applications.
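A supervision strategy can be sketched as a parent that catches a child's failure and decides what to do next. The Python below is a simplified, single-threaded illustration of a restart-then-escalate policy; `Worker`, `Supervisor`, and `max_restarts` are hypothetical names. Runtimes such as Erlang/OTP typically bound restarts within a time window as well, which this sketch omits.

```python
class Worker:
    """Child actor that crashes on a message it cannot handle."""

    def __init__(self):
        self.processed = 0

    def receive(self, msg):
        if msg == "poison":
            raise ValueError("cannot handle poison message")
        self.processed += 1


class Supervisor:
    """Restarts a failed child with fresh state up to max_restarts times,
    then stops it and escalates."""

    def __init__(self, child_factory, max_restarts=2):
        self.child_factory = child_factory
        self.max_restarts = max_restarts
        self.restarts = 0
        self.child = child_factory()
        self.stopped = False

    def deliver(self, msg):
        if self.stopped:
            return "dropped"                      # child is gone; message is not processed
        try:
            self.child.receive(msg)
            return "ok"
        except Exception:
            if self.restarts < self.max_restarts:
                self.restarts += 1
                self.child = self.child_factory()  # restart: discard possibly corrupt state
                return "restarted"
            self.stopped = True
            return "escalated"                    # give up; this supervisor's parent decides next
```

Restarting with fresh state is the key design choice: the supervisor assumes the failure may have left the child's state inconsistent, so it never reuses it.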

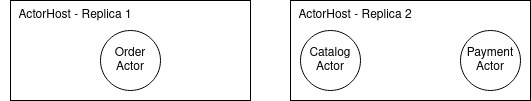

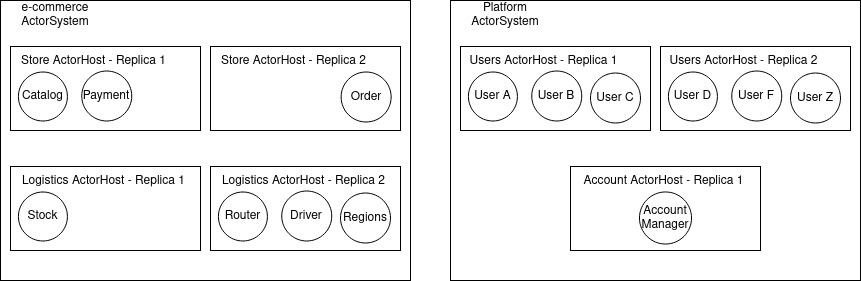

Distributed Coordination: In distributed systems, coordinating actors across multiple nodes can be challenging. Architectural decisions should account for network partitions, latency, and data consistency. Frameworks such as Spawn, Akka, Orleans, Dapr, and Kalix, or languages such as Erlang, Elixir, Pony, and Gleam, can simplify these aspects, and many of them provide built-in support for distributed actor systems.

Scalability Considerations: Scaling an actor-based system requires monitoring actor workloads and ensuring that actors are efficiently distributed across available resources. Load balancing strategies and dynamic actor creation/destruction policies need to be defined to maintain optimal performance.
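One way to distribute actors across nodes is deterministic placement by actor ID, so any node can compute where an actor lives without a central lookup. The sketch below uses rendezvous (highest-random-weight) hashing, a technique chosen here for illustration rather than taken from any framework named above; its useful property is that removing a node moves only the actors that lived on it. `place`, `_score`, and the node names are hypothetical.

```python
import hashlib


def _score(node, actor_id):
    """Stable pseudo-random weight for a (node, actor) pair."""
    digest = hashlib.sha256(f"{node}:{actor_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(actor_id, nodes):
    """Rendezvous hashing: the actor lives on the node with the highest score."""
    return max(nodes, key=lambda node: _score(node, actor_id))
```

Unlike `hash(actor_id) % len(nodes)`, which reshuffles most actors whenever the node count changes, this scheme keeps placements stable for every actor whose node is still present.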

Granularity of Actors and Its Impact

One critical architectural decision in the Actor Model is determining the granularity of actors. Granularity refers to the size and number of actors within the system, affecting both performance and maintainability.

Fine-Grained vs. Coarse-Grained Actors

Fine-Grained Actors: In this approach, actors are small, representing very specific tasks or entities. For example, each item in an inventory system might be an individual actor.

- Pros: High concurrency, fine control over individual components, easier to scale specific parts of the system.

- Cons: Higher overhead due to the increased number of actors, potential performance issues from frequent message passing, complex actor management (this can be mitigated by a runtime built for very large numbers of lightweight actors, such as Spawn or the BEAM runtime used by Erlang and Elixir).

Coarse-Grained Actors: Here, actors represent larger aggregates, such as an entire inventory or order processing system.

- Pros: Reduced overhead, fewer messages, simpler management.

- Cons: Reduced concurrency, potential bottlenecks if a single actor becomes overwhelmed, more complex internal state management.

Managing Granularity

To effectively manage granularity, consider the following strategies:

Profiling and Performance Testing: Continuously monitor the performance of your actor system. Identify bottlenecks and adjust the granularity accordingly. Profiling tools specific to actor systems, such as those available in Spawn, can help pinpoint performance issues.

Dynamic Actor Creation: Use actor hierarchies and on-demand actor creation to balance load. For instance, a coarse-grained actor can create fine-grained child actors to handle specific tasks only when they are needed, spreading the work as demand grows.

State Partitioning: Partition state across multiple actors where applicable. In a fine-grained system, ensure that each actor holds only the necessary state for its specific responsibility, reducing the overhead of managing large states.
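Dynamic creation and state partitioning often go together. In this minimal sketch, a coarse-grained `InventoryActor` spawns a fine-grained `SkuActor` the first time a SKU is referenced, and each child holds only its own SKU's stock. Both class names and the message tuples are hypothetical, and messages are delivered by direct method calls for brevity; in a real runtime each child would have its own mailbox and could run on another thread or node.

```python
class SkuActor:
    """Fine-grained child: holds stock for exactly one SKU."""

    def __init__(self, sku):
        self.sku = sku
        self.stock = 0

    def receive(self, msg):
        kind, qty = msg
        if kind == "restock":
            self.stock += qty
            return self.stock
        if kind == "reserve":
            if qty > self.stock:
                return "insufficient"
            self.stock -= qty
            return self.stock
        raise ValueError(f"unknown message {kind!r}")


class InventoryActor:
    """Coarse-grained parent: creates a SkuActor on first reference and routes to it."""

    def __init__(self):
        self.children = {}   # sku -> SkuActor; the parent stores no stock itself

    def receive(self, sku, msg):
        child = self.children.get(sku)
        if child is None:
            child = self.children[sku] = SkuActor(sku)   # dynamic creation on demand
        return child.receive(msg)
```

A production parent would usually also stop (passivate) idle children so that rarely used SKUs do not hold memory indefinitely.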

Batch Processing: In some cases, batch processing within actors can reduce the message-passing overhead. Group related tasks and process them in batches within a single actor, which can be particularly useful in coarse-grained systems.
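Batching can be as simple as draining several queued messages and then doing the expensive part of the work once. In the sketch below, `BatchingWriter` (a hypothetical name) collects up to `max_batch` messages per flush; the `flushes` list stands in for something like a single database write per batch.

```python
import queue


class BatchingWriter:
    """Drains up to max_batch queued messages and handles them as one unit of work."""

    def __init__(self, max_batch=100):
        self.mailbox = queue.Queue()
        self.max_batch = max_batch
        self.flushes = []   # stand-in for one expensive write per batch

    def send(self, record):
        self.mailbox.put(record)

    def process_available(self):
        """Process everything currently queued, in batches of at most max_batch."""
        while True:
            batch = []
            while len(batch) < self.max_batch:
                try:
                    batch.append(self.mailbox.get_nowait())
                except queue.Empty:
                    break
            if not batch:
                return
            self.flushes.append(batch)   # one flush for the whole batch
```

Ten queued records with `max_batch=4` produce three flushes instead of ten individual writes, and message order is preserved across batches.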

Modeling Actors as Business Entities

In business applications, actors can be modeled as business entities to better align with real-world processes and operations. Here’s how to effectively model actors as business entities like users, transactions, and more:

User Actors: Each user can be represented as an actor, encapsulating the user's state, preferences, and actions. This actor can handle messages related to user authentication, profile updates, and interaction with other parts of the system.

- Example: In a social media platform, a user actor might manage friend requests, message notifications, and content creation.

Transaction Actors: Transactions in financial systems can be modeled as actors to ensure secure and isolated processing. Each transaction actor handles the entire lifecycle of a transaction, from initiation to completion, including rollback in case of failures.

- Example: In a banking application, a transaction actor can manage the transfer of funds between accounts, ensuring consistency and integrity.

Order Actors: For e-commerce platforms, orders can be represented as actors. Each order actor manages the stages of the order lifecycle, such as validation, payment processing, inventory update, and shipping.

- Example: An order actor can interact with inventory actors to check stock availability and with payment actors to process transactions.
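The interaction in this example is a request-reply exchange between two actors. The sketch below uses Python's `asyncio`: the inventory actor owns its stock and an `asyncio.Queue` mailbox, while the order actor, simplified to a single coroutine, attaches a future to each request so the inventory actor can reply. `OrderActor`, `InventoryActor`, and the message shape are illustrative assumptions, and the inventory actor only checks availability; it does not reserve stock.

```python
import asyncio


class InventoryActor:
    def __init__(self, stock):
        self.stock = dict(stock)          # private state: sku -> quantity on hand
        self.mailbox = asyncio.Queue()

    async def run(self):
        while True:
            sku, qty, reply = await self.mailbox.get()
            if sku is None:               # stop message
                return
            reply.set_result(self.stock.get(sku, 0) >= qty)  # availability check only


class OrderActor:
    def __init__(self, order_id, items, inventory):
        self.order_id = order_id
        self.items = items                # list of (sku, quantity)
        self.inventory = inventory        # reference used only to send messages
        self.status = "new"

    async def validate(self):
        loop = asyncio.get_running_loop()
        for sku, qty in self.items:
            reply = loop.create_future()  # where the inventory actor will put its answer
            await self.inventory.mailbox.put((sku, qty, reply))
            if not await reply:
                self.status = "rejected"
                return self.status
        self.status = "validated"
        return self.status


async def demo():
    inventory = InventoryActor({"book": 2, "pen": 0})
    runner = asyncio.create_task(inventory.run())
    first = await OrderActor("o-1", [("book", 1)], inventory).validate()
    second = await OrderActor("o-2", [("book", 1), ("pen", 1)], inventory).validate()
    await inventory.mailbox.put((None, 0, None))
    await runner
    return first, second
```

The order actor never reads the inventory's stock directly; it only learns the answer through the reply.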

Game Entities: In a game application, actors can represent various game entities such as players, NPCs, items, and game rooms. Each game entity actor manages its state and behavior, interacting through messages to create a dynamic and interactive game environment.

- Example: In a multiplayer online game, player actors manage individual player states, NPC actors handle non-player character behavior, and item actors represent in-game objects like weapons and power-ups.

Actor Model and Architectural Patterns

Actors can be used to implement various architectural patterns, enhancing the robustness and scalability of business applications:

Saga Pattern: The Saga pattern is used to manage long-running transactions by breaking them into a series of smaller, isolated transactions that can be individually committed or compensated. Actors are well-suited for implementing the Saga pattern due to their message-passing capabilities and independent state management.

- Implementation: Each step of a saga can be an actor, with a coordinator actor managing the overall process. The coordinator sends messages to initiate each step and handles compensation actions in case of failures.
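The coordinator logic can be sketched as follows, assuming each participant actor understands two hypothetical messages, `"do"` and `"undo"` (its compensating action). `Participant` and `SagaCoordinator` are illustrative names, messages are delivered by direct calls to keep the example deterministic, and a production coordinator would also persist its progress so it can resume after a crash.

```python
class Participant:
    """A saga step modeled as an actor that understands 'do' and 'undo' messages."""

    def __init__(self, name, fail=False):
        self.name = name
        self.fail = fail        # hypothetical switch to simulate a failing step
        self.applied = False

    def receive(self, msg):
        if msg == "do":
            if self.fail:
                return "failed"
            self.applied = True
            return "done"
        if msg == "undo":
            self.applied = False   # compensating action
            return "undone"
        raise ValueError(f"unknown message {msg!r}")


class SagaCoordinator:
    """Runs steps in order; on failure, compensates completed steps in reverse order."""

    def __init__(self, participants):
        self.participants = participants
        self.log = []

    def run(self):
        completed = []
        for p in self.participants:
            reply = p.receive("do")
            self.log.append(f"{p.name}:{reply}")
            if reply != "done":
                for done in reversed(completed):
                    self.log.append(f"{done.name}:{done.receive('undo')}")
                return "aborted"
            completed.append(p)
        return "committed"
```

If shipping fails after inventory and payment succeeded, the coordinator refunds the payment first and then releases the inventory, undoing work in the reverse order it was done.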

Event Sourcing: Actors can be used to implement event sourcing, where changes to the state are stored as a sequence of events. Each actor can manage its event log, ensuring that the state can be reconstructed by replaying events.

- Implementation: An order actor, for instance, can log events such as "OrderPlaced," "PaymentProcessed," and "OrderShipped." Replaying these events can reconstruct the current state of the order.
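Replaying events to rebuild state is easiest to see with a pure transition function. In this sketch, `OrderState`, `apply`, `OrderActor`, and the command names (`place`, `pay`, `ship`) are hypothetical; the journal is an in-memory list standing in for durable storage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderState:
    status: str = "none"
    paid: bool = False


def apply(state, event):
    """Pure transition: current state + one event -> next state."""
    if event == "OrderPlaced":
        return OrderState(status="placed")
    if event == "PaymentProcessed":
        return OrderState(status=state.status, paid=True)
    if event == "OrderShipped":
        return OrderState(status="shipped", paid=state.paid)
    raise ValueError(f"unknown event {event!r}")


EVENT_FOR_COMMAND = {"place": "OrderPlaced", "pay": "PaymentProcessed", "ship": "OrderShipped"}


class OrderActor:
    def __init__(self, journal=()):
        self.journal = list(journal)           # append-only event log (in memory here)
        self.state = OrderState()
        for event in self.journal:             # recovery: rebuild state by replaying history
            self.state = apply(self.state, event)

    def receive(self, command):
        if command == "ship" and not self.state.paid:
            return "rejected"                  # commands are validated; events are facts
        event = EVENT_FOR_COMMAND[command]
        self.journal.append(event)             # record the event first...
        self.state = apply(self.state, event)  # ...then update in-memory state
        return self.state.status
```

A rejected command produces no event, so the journal only ever contains facts that actually happened, and a new actor built from that journal reaches exactly the same state.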

CQRS (Command Query Responsibility Segregation): In a CQRS architecture, actors can handle commands (state-changing operations) and queries (read operations) separately, improving scalability and performance.

- Implementation: Command actors handle operations that modify the state, while query actors provide read-only views of the state. This separation can enhance performance by allowing the system to scale read and write operations independently.
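A minimal sketch of that separation, with hypothetical actor names: the command actor validates and applies writes, then publishes an event; the query actor maintains its own denormalized view built from those events. Events are delivered synchronously here; in a real system they usually travel asynchronously, so the read side is eventually consistent with the write side.

```python
class OrderCommandActor:
    """Write side: validates commands, updates its own state, publishes events."""

    def __init__(self, subscribers):
        self.totals = {}               # authoritative write-side state: order_id -> total
        self.subscribers = subscribers

    def receive(self, command):
        kind, order_id, amount = command
        if kind != "add_item" or amount <= 0:
            return "rejected"
        self.totals[order_id] = self.totals.get(order_id, 0) + amount
        for sub in self.subscribers:
            sub.receive(("ItemAdded", order_id, amount))   # in practice asynchronous, so reads may lag
        return "accepted"


class OrderSummaryQueryActor:
    """Read side: maintains a denormalized view optimized for queries."""

    def __init__(self):
        self.view = {}                 # order_id -> {"items": n, "total": x}

    def receive(self, event):
        _, order_id, amount = event
        row = self.view.setdefault(order_id, {"items": 0, "total": 0})
        row["items"] += 1
        row["total"] += amount

    def query(self, order_id):
        return dict(self.view.get(order_id, {"items": 0, "total": 0}))
```

Because the read model is built only from events, more query actors can be added to serve reads without touching the write side.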

State Machines: Actors can represent state machines, where each state of the actor corresponds to a different behavior. This is particularly useful in scenarios where an entity has a well-defined lifecycle with distinct states.

- Implementation: Consider a user registration process. The user actor can have states like "New," "Email Sent," "Verified," and "Active." Each state defines how the actor responds to messages, enabling clear and maintainable transitions.
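The registration lifecycle can be expressed as a transition table that maps each state to the messages it accepts. `UserRegistrationActor` and the message names (`send_email`, `confirm`, `activate`) are hypothetical, and messages that are invalid in the current state are ignored rather than raising an error, which is one policy among several.

```python
class UserRegistrationActor:
    """Each state maps the messages it accepts to the next state."""

    TRANSITIONS = {
        "New": {"send_email": "Email Sent"},
        "Email Sent": {"confirm": "Verified", "send_email": "Email Sent"},  # resend allowed
        "Verified": {"activate": "Active"},
        "Active": {},
    }

    def __init__(self):
        self.state = "New"

    def receive(self, message):
        next_state = self.TRANSITIONS[self.state].get(message)
        if next_state is None:
            return f"ignored {message!r} in state {self.state!r}"
        self.state = next_state
        return self.state
```

Keeping the transitions in one table makes every legal path visible at a glance and turns adding a new state into a data change rather than a new branch of conditionals.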

Conclusion

The Actor Model offers a robust paradigm for building scalable, resilient, and maintainable software systems. By embracing message passing and encapsulating state within actors, developers can address the complexities of concurrency and distribution effectively. In business applications, this model can streamline processes such as order handling, customer interactions, financial transactions, and game interactions. The granularity of actors significantly affects system performance and maintainability and should be managed deliberately through profiling, dynamic actor creation, state partitioning, and batch processing. Actors can also be modeled as business entities, aligning system design with real-world processes. Finally, by implementing architectural patterns such as Sagas, Event Sourcing, CQRS, and State Machines, the Actor Model can enhance the robustness and scalability of a wide range of applications. Spawn in particular can simplify several of the use cases presented here, since it was designed from the ground up to ease the creation of business applications.

In future posts we will talk more about how Spawn can be essential in developing real-world applications. These are exciting times for serverless actor computing. Happy coding! 🚀✨